What Is Lag, and How Do You Deal with It? An Introduction to Online Game Development

Lag is common, especially when the Internet is involved. In this article, I will answer some basic questions about lag and, more specifically, how it affects online gaming. I’m Mateusz Baran from PixelAnt Games, and I have six years of experience in multiplayer game development. While I don’t consider myself an expert, I am a passionate gamer, so I will write this article as someone passionate about online games, both creating and playing them.

In the first part, I’ll define latency and break it down into simpler parts for better understanding. In the second part, I’ll share techniques for reducing latency’s impact on gameplay, illustrated with examples from games you may know or have played.

Let’s start with the definition of latency. When someone mentions lag or latency, the immediate association is with network issues. Network latency is undoubtedly the most common type of lag. However, and this may be controversial, I believe game developers shouldn’t focus on network latency as heavily as we currently do.

What matters most to us as game developers is the end-user experience: what the player feels while playing the game. Often, we can’t directly correlate network latency with this experience. Still, let’s start with a trusted source, Wikipedia: “lag (network lag) in video games refers to the delay (latency) between the user’s action (input) and the server’s reaction supporting the task, which must be sent back to the client.” This definition might seem complex, so let me simplify it: network latency arises because we send data to the server, the server processes it, and then it sends a response back to our game client. Naturally, this takes time.

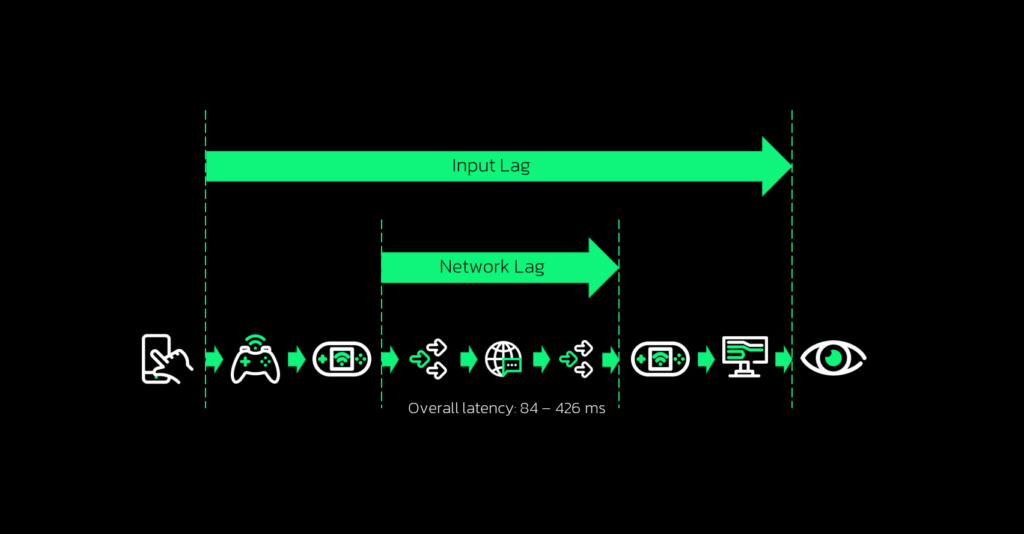

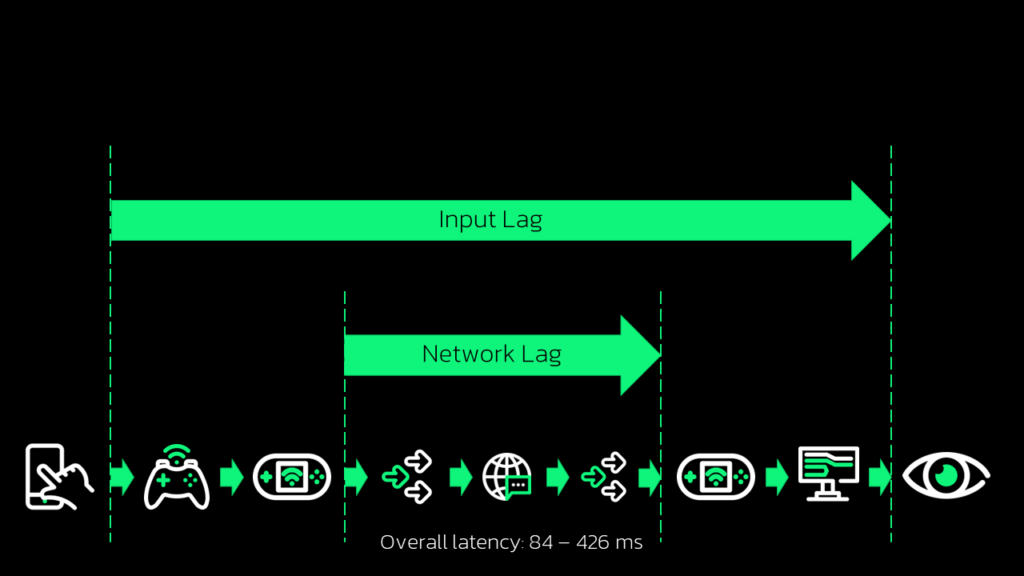
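One common way to observe this round trip is to stamp each request with the client’s send time, have the server echo the stamp back, and subtract it from the arrival time. The sketch below is a minimal illustration, assuming a simulated link with fixed, hypothetical delays instead of a real socket; `SimulatedLink` and `ping_once` are illustrative names, not part of any engine.

```python
from dataclasses import dataclass


@dataclass
class SimulatedLink:
    """Hypothetical link with fixed one-way delays, in milliseconds."""
    client_to_server_ms: float
    server_to_client_ms: float


def ping_once(link: SimulatedLink, server_processing_ms: float, send_time_ms: float) -> float:
    """Simulate one request/response and return the round trip the client measures."""
    stamp = send_time_ms  # the client stamps the request with its own clock
    arrival_at_server = stamp + link.client_to_server_ms
    # The server processes the request and echoes the stamp back unchanged.
    reply_sent = arrival_at_server + server_processing_ms
    reply_arrival = reply_sent + link.server_to_client_ms
    # The client only needs its own clock: round trip = arrival time - echoed stamp.
    return reply_arrival - stamp


link = SimulatedLink(client_to_server_ms=20.0, server_to_client_ms=25.0)
rtt = ping_once(link, server_processing_ms=5.0, send_time_ms=1000.0)
print(f"measured round trip: {rtt:.1f} ms")  # 50.0 ms = 20 + 5 + 25
```

The measured value includes the server’s processing time, and the two directions need not be symmetric, so halving it only approximates the one-way delay.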

For game developers, however, the definition of input latency is even more important. Again, from Wikipedia: “in video games, the term is often used to describe any delay between input and the game engine, monitor, or any other part of the signal chain reacting to that input. All contributions of input lag are cumulative.” In simpler terms, input latency is the time from the moment the player initiates an action to the moment they see its consequences on the screen. It involves multiple steps, so let me walk you through them to make the nature of this latency clearer.

The first step is the input device: a mouse, keyboard, or gamepad. Latency varies from device to device, so for competitive play, wireless office peripherals may not be ideal because of their inherent latency.

Next is the game client. It takes the player’s input, packs it into network packets, and sends it to the server. Although packing the data is fast, this stage still adds around 10 to 50 milliseconds, because netcode reduces bandwidth by sending data less frequently than the renderer produces frames.
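Why does sending less often than rendering add delay? Input collected on a frame has to wait in a buffer until the next network send tick. The sketch below models only that buffering wait, under assumed numbers (144 FPS rendering and a few hypothetical send rates); the function names are illustrative, and real netcode schedules sends in many different ways.

```python
import math


def wait_until_next_send_ms(input_time_ms: float, send_rate_hz: float) -> float:
    """How long an input sits in the client's buffer before the next send tick.

    Assumes send ticks at t = 0, 1/rate, 2/rate, ... and that an input landing
    exactly on a tick leaves with that tick.
    """
    interval_ms = 1000.0 / send_rate_hz
    # The small epsilon keeps floating-point noise from pushing an on-tick input to the next tick.
    next_tick_ms = math.ceil(input_time_ms / interval_ms - 1e-9) * interval_ms
    return next_tick_ms - input_time_ms


def average_wait_ms(frame_rate_hz: float, send_rate_hz: float, duration_ms: float = 10_000.0) -> float:
    """Average buffering delay when input is sampled once per rendered frame."""
    frame_ms = 1000.0 / frame_rate_hz
    frames = int(duration_ms / frame_ms)
    waits = [wait_until_next_send_ms(i * frame_ms, send_rate_hz) for i in range(frames)]
    return sum(waits) / len(waits)


for send_rate in (60, 30, 20):
    print(f"{send_rate} Hz sends: worst {1000 / send_rate:.1f} ms, "
          f"average {average_wait_ms(144, send_rate):.1f} ms at 144 FPS")
```

Roughly speaking, the worst case is one full send interval and the average is about half of it, so lowering the send rate to save bandwidth directly adds input latency.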

Then comes network transfer, which depends on variables such as the distance between your PC and the server, your ISP, and your connection type. For a well-configured setup, this latency typically ranges from 5 to 60 milliseconds, but in extreme cases it can reach thousands of milliseconds.

The data then reaches the server, which validates the input, checks for cheating attempts or changes in context, and applies the input to the server’s simulation. The result is sent back to the game client, subject to the same network conditions.
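A minimal sketch of that server step, assuming a 2D movement game with a hypothetical `MAX_SPEED` limit and per-player input sequence numbers (neither comes from this article): the server drops stale or duplicate inputs, rejects nonsensical time steps, clamps impossible movement as a basic anti-cheat check, and only then applies the input to its authoritative simulation.

```python
import math
from dataclasses import dataclass

MAX_SPEED = 5.0  # hypothetical limit, in world units per second


@dataclass
class PlayerState:
    x: float = 0.0
    y: float = 0.0
    last_seq: int = -1  # sequence number of the last input the server applied


@dataclass
class MoveInput:
    seq: int   # client-assigned, increasing sequence number
    dx: float  # requested velocity
    dy: float
    dt: float  # seconds this input covers


def apply_input(state: PlayerState, cmd: MoveInput) -> bool:
    """Validate a client input and apply it to the authoritative state.

    Returns False when the input is rejected.
    """
    # Drop duplicated or out-of-order packets.
    if cmd.seq <= state.last_seq:
        return False
    # Reject nonsensical time steps (a simple context check).
    if not (0.0 < cmd.dt <= 0.25):
        return False
    # Clamp the requested velocity: a modified client cannot move faster than allowed.
    speed = math.hypot(cmd.dx, cmd.dy)
    scale = min(1.0, MAX_SPEED / speed) if speed > 0 else 0.0
    state.x += cmd.dx * scale * cmd.dt
    state.y += cmd.dy * scale * cmd.dt
    state.last_seq = cmd.seq
    return True


player = PlayerState()
print(apply_input(player, MoveInput(seq=0, dx=100.0, dy=0.0, dt=0.1)))  # True, but clamped
print(f"{player.x:.2f}")  # 0.50, not 10.00
print(apply_input(player, MoveInput(seq=0, dx=1.0, dy=0.0, dt=0.1)))  # False: duplicate seq
```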

Finally, the game client receives the server’s response and presents it to the player, which can take 50 to 150 milliseconds because of the rendering engine’s processing.

Adding the output device’s delay (pixel response time, refresh rate, electronics delay) gives the overall input lag, which is what shapes the end-user experience. Network latency is only one section of this chain, which is why I suggest that game developers focus more on input latency than on network latency.
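Since every contribution is cumulative, the chain can be modelled as a list of stages whose best and worst cases simply add up. This minimal sketch uses only the ranges quoted above and assumes the return trip has the same 5 to 60 ms range as the outbound one; the input device, server processing, and output device are omitted because no separate numbers are given for them, so the result is a partial sum rather than the full chain. `Stage` and `total_latency` are illustrative names.

```python
from typing import NamedTuple


class Stage(NamedTuple):
    name: str
    min_ms: float
    max_ms: float


def total_latency(stages: list[Stage]) -> tuple[float, float]:
    """Input lag is cumulative: sum each stage's best and worst case."""
    return (sum(s.min_ms for s in stages), sum(s.max_ms for s in stages))


# Ranges quoted in the text; other stages would be appended the same way.
known_stages = [
    Stage("client packs and sends input", 10, 50),
    Stage("network: client to server", 5, 60),
    Stage("network: server to client (assumed same range)", 5, 60),
    Stage("client presents the result", 50, 150),
]

low, high = total_latency(known_stages)
print(f"known stages alone: {low:.0f} to {high:.0f} ms")  # 70 to 320 ms
```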

Examining the entire process reveals aspects that aren’t obvious at first. Consider the rendering engine: reducing its latency can compensate for network constraints. This doesn’t mean neglecting network optimization; it means improving responsiveness for players who experience higher network latency, without any changes to the network itself.

Let’s discuss numbers for a moment. Based on my assumptions, overall latency typically falls between 84 and 426 milliseconds. In practice, these extremes are uncommon, so let’s consider the midpoint of 255 milliseconds. Is this low or high, and what should we target? The answer varies, but here’s a rule of thumb: if your game’s input latency is lower than the player’s reaction time (around 200 milliseconds on average), you’ve met a good benchmark. It’s smart to aim for that value or a bit lower.

Some suggest aiming for 180 or 160 milliseconds, but it depends on your game and audience. A chess game might manage with over 500 milliseconds of input latency if it offers great features. For fast-paced, competitive games built around quick action and response, however, minimizing input latency is crucial, so lower targets are recommended.
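The arithmetic behind these targets is easy to check. This sketch takes the 84 to 426 ms range from above, computes its midpoint, and compares it with the 200 ms reaction-time benchmark, the stricter 180 and 160 ms targets, and a relaxed 500 ms budget for something like chess; `within_budget` is a hypothetical helper, not an established metric.

```python
AVERAGE_REACTION_MS = 200  # rule-of-thumb average human reaction time


def midpoint(low_ms: float, high_ms: float) -> float:
    return (low_ms + high_ms) / 2


def within_budget(latency_ms: float, target_ms: float = AVERAGE_REACTION_MS) -> bool:
    """True when the input latency stays below the chosen target."""
    return latency_ms < target_ms


typical = midpoint(84, 426)
print(typical)  # 255.0
for target in (200, 180, 160):
    print(target, within_budget(typical, target))  # False for all three
# A slow-paced game such as chess may accept far more, e.g. a 500 ms target.
print(within_budget(typical, 500))  # True
```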

We now understand the root of lag and its potential consequences: if left unaddressed, our average input delay could exceed the average human reaction time, and many players would notice. Let’s take a closer look at a few strategies for improving perceived input latency.

DISTRACTION

The first technique I’ll discuss is distraction. Like street magicians, game developers divert attention. While the server keeps the game state consistent, we are free to enhance the experience on the client: adding animations, sound effects, and visuals is fine, as long as they don’t affect the game state.

MOVEMENT INDICATORS

For instance, movement indicators in top-down games like StarCraft II or League of Legends show local, animated cues right after a mouse click. The actual movement, however, waits for the server’s response.

To demonstrate this, I disconnected the network. The local animations still play, but the character doesn’t move, because it waits for a server response that can’t arrive without an internet connection.
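A sketch of the idea behind movement indicators, assuming a hypothetical client that keeps purely cosmetic effects separate from the server-owned game state: the click spawns a local marker immediately, while the character’s destination changes only when the server’s confirmation arrives. `ClientView` and its methods are illustrative names. With no connection, the confirmation never comes, so the marker plays but the character stays put.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ClientView:
    character_target: Optional[tuple[float, float]] = None  # set only by the server
    effects: list[str] = field(default_factory=list)  # cosmetic, never sent to the server
    outbox: list[tuple[float, float]] = field(default_factory=list)  # move requests to send

    def on_right_click(self, x: float, y: float) -> None:
        # Distraction: instant local feedback that does not touch the game state.
        self.effects.append(f"move marker at ({x}, {y})")
        # The actual move request goes to the server.
        self.outbox.append((x, y))

    def on_server_move_confirmed(self, x: float, y: float) -> None:
        # Only the server's answer changes where the character is heading.
        self.character_target = (x, y)


view = ClientView()
view.on_right_click(3.0, 4.0)
print(view.effects)           # ['move marker at (3.0, 4.0)']
print(view.character_target)  # None: still waiting for the server
view.on_server_move_confirmed(3.0, 4.0)
print(view.character_target)  # (3.0, 4.0)
```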

PROJECTILES

The next application of this technique involves projectiles, such as rockets or grenades. Watching footage from Quake III Arena, you might not notice anything odd: despite the game’s dated graphics, the gameplay looks normal. Analyzing it frame by frame, however, reveals the details. Before the projectile appears, the frames include distractions (a barrel explosion, the weapon animation, recoil) but no projectile. Counting frames, it takes around seven frames (about 117 milliseconds at 60 FPS) for the projectile to appear. Combined with input and output device latency, this delay would be noticeable, but thanks to the distractions, it doesn’t feel as pronounced.
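Converting a frame count into time is simple arithmetic: at a constant frame rate, each frame lasts 1000 / FPS milliseconds. The helper below is a hypothetical convenience for doing this while stepping through footage, assuming the recording ran at a steady frame rate.

```python
def frames_to_ms(frames: int, fps: float) -> float:
    """Duration of a number of frames at a constant frame rate."""
    return frames * 1000.0 / fps


delay = frames_to_ms(7, 60)
print(f"{delay:.1f} ms")  # 116.7 ms, i.e. more than 100 ms
```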

CLIENT-SIDE PREDICTION

The next technique I’ll highlight is client-side prediction. It involves running similar simulations of local objects on both the client and the server. Because the client runs the same logic, it can anticipate the server’s response and act on player input without waiting for network communication.

A common example is player movement, as in Overwatch 2. In the recorded footage, a box in the lower right corner displays the keystrokes. Going frame by frame, we compare the last frame with no key pressed to the first frame after pressing the “A” key (move left). The character moves immediately, if only slightly because of acceleration. This quick reaction occurs without waiting for network communication.

In top-down games, waiting for the server before moving, as with the movement indicators above, is usually acceptable. In first-person games, however, such a delay can be annoying or even make the game unplayable, so client-side prediction becomes crucial for a smooth experience.
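Below is a minimal sketch of client-side prediction for movement, assuming a shared, deterministic `simulate` step (a hypothetical stand-in for the game’s movement code) that both client and server run. The client applies each input immediately and remembers it; when the server’s authoritative state arrives, the client adopts it and replays the inputs the server hasn’t processed yet. The replay step is one common way to combine the two simulations, not necessarily how Overwatch 2 does it.

```python
from dataclasses import dataclass, field

SPEED = 4.0  # hypothetical movement speed, units per second


def simulate(x: float, move: float, dt: float) -> float:
    """Shared movement step, run identically on client and server."""
    return x + move * SPEED * dt


@dataclass
class PredictedPlayer:
    x: float = 0.0
    next_seq: int = 0
    pending: list[tuple[int, float, float]] = field(default_factory=list)  # (seq, move, dt)

    def local_input(self, move: float, dt: float) -> int:
        """Apply input right away instead of waiting for the network."""
        seq = self.next_seq
        self.next_seq += 1
        self.x = simulate(self.x, move, dt)
        self.pending.append((seq, move, dt))
        return seq  # would be sent to the server along with the input

    def on_server_state(self, server_x: float, last_processed_seq: int) -> None:
        """Adopt the authoritative state, then re-apply inputs it has not seen yet."""
        self.pending = [p for p in self.pending if p[0] > last_processed_seq]
        self.x = server_x
        for _, move, dt in self.pending:
            self.x = simulate(self.x, move, dt)


player = PredictedPlayer()
for _ in range(3):
    player.local_input(move=-1.0, dt=0.25)  # holding "A": move left
print(player.x)  # -3.0, visible immediately
# The server has processed only the first input so far and agrees with it.
player.on_server_state(server_x=-1.0, last_processed_seq=0)
print(player.x)  # still -3.0: the prediction was correct
```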

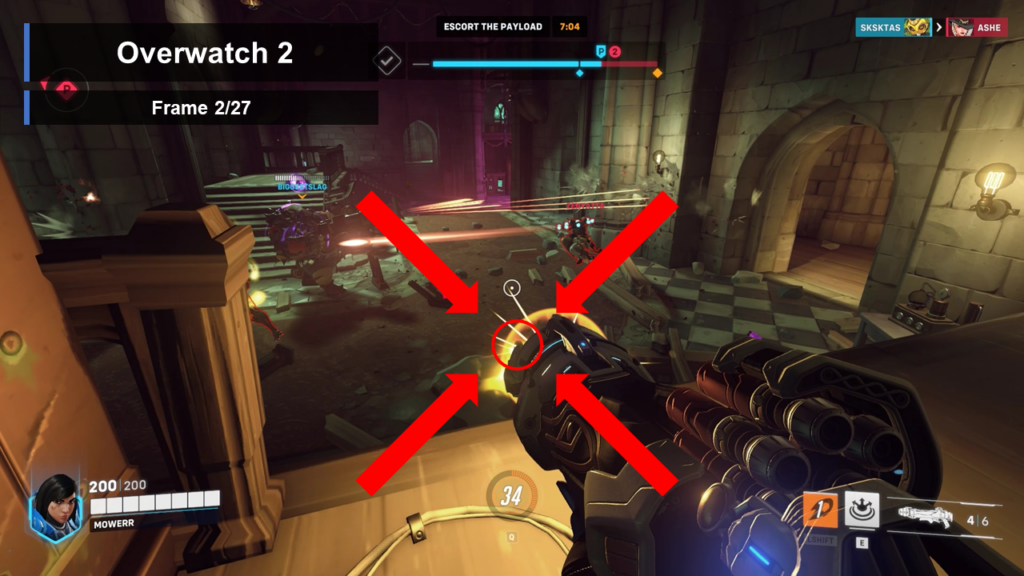

The next application of this technique might surprise you: it’s projectiles again. Quake III Arena’s gameplay is decent, but there’s room for improvement, especially in modern games where characters and weapons fire fast projectiles. The faster the projectile, the more noticeable the gap between the weapon and the position where the projectile first appears. This is where Overwatch 2’s clever use of client-side prediction comes in.

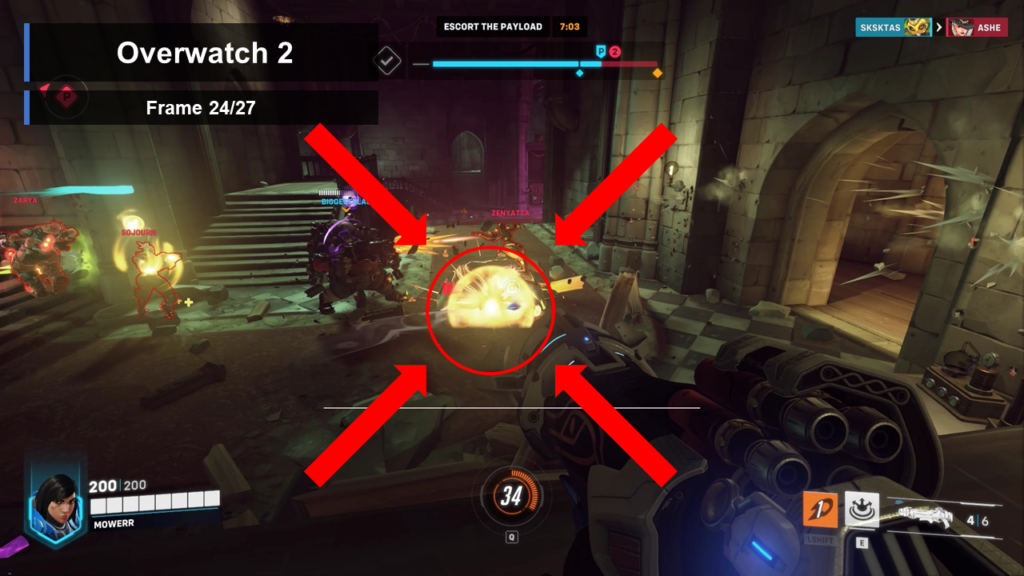

Comparing frames before and during the projectile launch, we notice similar distractions, but with a crucial difference: a shiny object representing the projectile. Firing a rocket can be divided into two parts: spawning and moving the rocket, which suits client-side prediction, and the explosion and damage application, which matter more for the game state and are therefore handled exclusively on the server in Overwatch 2.

Following the projectile until it hits the ground, we count around seven frames before the explosion appears, which indicates that the explosion itself is not predicted on the client. The crucial frame showing damage application is when the white line animation begins after an enemy is eliminated; again, it appears seven frames after the projectile hits the ground.
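A sketch of that split, under assumed names and numbers (`PredictedRocket`, `client_fire`, `server_resolve_hit`, the speed, damage, and radius are all hypothetical, not Overwatch 2’s actual code): the client spawns and moves a cosmetic copy of the rocket the moment the player fires, while the explosion and damage are resolved only by the server-side function, which the client never calls.

```python
from dataclasses import dataclass

ROCKET_SPEED = 25.0  # hypothetical, units per second
ROCKET_DAMAGE = 120  # hypothetical


@dataclass
class PredictedRocket:
    """Client-only visual: spawned instantly, moved locally, never deals damage."""
    x: float
    velocity: float

    def update(self, dt: float) -> None:
        self.x += self.velocity * dt


def client_fire(weapon_x: float) -> PredictedRocket:
    # Spawn at the weapon immediately, so there is no gap between muzzle and rocket.
    return PredictedRocket(x=weapon_x, velocity=ROCKET_SPEED)


def server_resolve_hit(impact_x: float, target_x: float, target_hp: int, radius: float = 2.0) -> int:
    """Authoritative part: only the server decides on explosions and damage."""
    if abs(impact_x - target_x) <= radius:
        return max(0, target_hp - ROCKET_DAMAGE)
    return target_hp


rocket = client_fire(weapon_x=0.0)
rocket.update(0.25)
print(rocket.x)  # 6.25 units in front of the weapon
print(server_resolve_hit(impact_x=10.0, target_x=11.0, target_hp=200))  # 80
```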

Let’s step back and consider what client-side prediction does to our input latency chain. Its effect is close to that of distraction: it makes the experience feel like a single-player game, which is generally the most responsive setup in terms of input latency.

TELEPORTATION

Client-side prediction has drawbacks, mainly the potential for mispredictions. When a misprediction causes a conflict, a simple solution is teleportation: instantly correcting the local object’s state to match the authoritative server data. This correction might seem abrupt, but in most cases the prediction is accurate and no conflict resolution is needed. Even when a correction is necessary, it’s often negligible, a fraction of a pixel that is barely noticeable.

There are scenarios where these corrections become visible, especially during network issues. At such times, however, visible artifacts aren’t exclusive to client-side prediction; they affect many techniques because the network’s performance is degraded.

Consider Diablo III during network issues. Focus on the central character: client-side prediction lets it move freely, but the game server disagrees, so the character repeatedly teleports back to its starting position. This is terrible for the player experience, but it’s common during network disruptions, and many players have encountered it.
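Teleportation is the simplest possible correction. In this sketch, the `TOLERANCE` threshold and the `reconcile_by_teleport` name are hypothetical: differences below the threshold are treated as a correct prediction, and anything larger snaps the predicted position straight to the server’s. If the server keeps rejecting the predicted movement, as in the Diablo example, every call lands in the second branch and the character is thrown back again and again.

```python
import math

TOLERANCE = 0.01  # hypothetical: mismatches below this are left alone


def reconcile_by_teleport(predicted: tuple[float, float],
                          server: tuple[float, float]) -> tuple[float, float]:
    """Return the position to display after comparing prediction with the server."""
    error = math.dist(predicted, server)
    if error <= TOLERANCE:
        return predicted  # prediction was (close enough to) correct: nothing to fix
    return server  # misprediction: jump instantly to the authoritative state


print(reconcile_by_teleport((10.0, 5.0), (10.0, 5.004)))  # (10.0, 5.0): unchanged
print(reconcile_by_teleport((10.0, 5.0), (2.0, 5.0)))     # (2.0, 5.0): snapped back
```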

INTERPOLATION

For conflict resolution, interpolation offers a more refined approach. Instead of changing the state instantly, it smoothly transitions from the invalid state to the valid state provided by the server over a short period. While teleportation suffices for client-side prediction of local objects, interpolation shines for remote objects that rely solely on periodic server snapshots. The local simulation is constantly adjusted based on updated server information, chasing the latest known state without ever fully reaching it.

In Counter-Strike: Global Offensive (CS:GO), we can see an enemy being unnaturally pulled toward a ladder over a few frames: the movement is unexpected, but interpolation keeps the transition smooth.
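A sketch of the “chase” described above, assuming exponential smoothing toward the latest server position; the `chase` function and its `rate` parameter are hypothetical tuning choices, and many engines instead interpolate between two buffered snapshots. Each frame closes a fixed fraction of the remaining gap, so the displayed object moves smoothly and approaches, but never exactly reaches, the target.

```python
import math


def chase(current: float, target: float, rate: float, dt: float) -> float:
    """Move a displayed value toward the latest server value, frame-rate independently."""
    # Fraction of the remaining gap closed this frame; always below 1 for finite dt.
    alpha = 1.0 - math.exp(-rate * dt)
    return current + (target - current) * alpha


shown = 0.0          # where the remote player is drawn
server_latest = 1.0  # newest position from a server snapshot
for frame in range(5):
    shown = chase(shown, server_latest, rate=10.0, dt=1 / 60)
    print(f"frame {frame}: {shown:.3f}")
```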

EXTRAPOLATION

Extrapolation is a guessing game: it predicts a remote object’s actions based on its recent behavior. Guessing isn’t the best algorithm, but when delays start to hurt the player experience, it becomes a plausible option. Racing games suffer the most, especially during disconnections. For example, in Forza Horizon 5, when a car veers off track because of a lost connection, the correction is highly noticeable.

Going through this frame by frame: the moment the connection is lost, the game assumes the car will continue in its recent direction at its recent speed, so the car goes off the road. The correction then happens over a few frames, returning the car to the track. Interestingly, the extrapolation itself isn’t visibly disruptive: the smooth simulation maintains the illusion that the opponent is simply driving erratically, trying to hit the nearest tree. It’s the correction that stands out.

In an example from Fortnite, the enemy is barely visible but appears to run steadily toward the right side of the screen, a sign of extrapolation predicting their movement from previous behavior. Suddenly, a visible correction occurs, and within a few frames, the opponent ends up on the roof.
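Both cases match a simple form of extrapolation often called dead reckoning: keep moving the remote object along its last known velocity until new data arrives. The sketch below is an assumed, minimal version, not the actual netcode of Forza Horizon 5 or Fortnite; `Snapshot`, `extrapolate`, and the `max_extrapolation_s` cap are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    time_s: float
    x: float
    y: float
    vx: float  # velocity reported with the snapshot
    vy: float


def extrapolate(last: Snapshot, now_s: float, max_extrapolation_s: float = 1.0) -> tuple[float, float]:
    """Guess the current position from the most recent snapshot."""
    # Cap how far ahead we guess so a lost connection can't fling the object forever.
    elapsed = min(max(0.0, now_s - last.time_s), max_extrapolation_s)
    return (last.x + last.vx * elapsed, last.y + last.vy * elapsed)


last = Snapshot(time_s=10.0, x=0.0, y=0.0, vx=30.0, vy=0.0)
print(extrapolate(last, now_s=10.5))  # (15.0, 0.0): the car keeps going straight
print(extrapolate(last, now_s=13.0))  # (30.0, 0.0): capped at one second of guessing
```

When real data arrives and disagrees with the guess, the position still has to be corrected, by teleporting or by interpolating toward it, and that correction is the part that stands out in the footage.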

LAG COMPENSATION

This leads us to the last technique: lag compensation. If distraction is like being a street magician, this is more like being a time traveler, which in my opinion is a more complex task. It’s a vital feature for most first-person shooters using projectile-based weapons and can be valuable for melee-centric games like For Honor, where it compensates for latency in weapon collision calculations.

Let’s simplify this complex concept. Consider an enemy running to the right, as seen from the server’s viewpoint. Now add our own perspective: what we see. Recall that we constantly trail the server’s data and never quite reach the real-time state. The real challenge arises when we try to shoot this enemy.

Where do we aim? Where we see the enemy, right? The problem is that this isn’t where the server sees our enemy. Making things trickier, by the time the server receives the data about our shot, the opponent has moved even further. Without lag compensation, the server would simply compare positions, say “You missed, no damage,” and move on with the simulation. Lag compensation fixes this.

Lag compensation happens exclusively on the server. Forget about our local view for now. The server rewinds time to see exactly what the shooter saw when taking the shot, performs the comparison, returns to the current state, and continues the simulation. All of this is quite fast, almost instantaneous.
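A server-side sketch of the rewind, under stated assumptions: the server keeps a short history of each target’s timestamped positions (1D here for brevity), estimates the moment the shooter was looking at as the current server time minus the shooter’s latency and an assumed interpolation delay, interpolates the target’s position at that moment, and runs the hit test there. `PositionHistory`, `server_check_hit`, and all parameters are hypothetical; real implementations differ in many details.

```python
from bisect import bisect_left
from collections import deque


class PositionHistory:
    """Server-side record of one target's recent positions (1D for brevity)."""

    def __init__(self, max_age_s: float = 1.0) -> None:
        self.samples: deque = deque()  # (server_time_s, x) pairs, oldest first
        self.max_age_s = max_age_s

    def record(self, time_s: float, x: float) -> None:
        self.samples.append((time_s, x))
        # Forget samples too old to be useful for rewinding.
        while self.samples and time_s - self.samples[0][0] > self.max_age_s:
            self.samples.popleft()

    def position_at(self, time_s: float) -> float:
        """Rewind: interpolate the target's position at a past server time."""
        times = [t for t, _ in self.samples]
        i = bisect_left(times, time_s)
        if i == 0:
            return self.samples[0][1]
        if i == len(times):
            return self.samples[-1][1]
        (t0, x0), (t1, x1) = self.samples[i - 1], self.samples[i]
        return x0 + (x1 - x0) * (time_s - t0) / (t1 - t0)


def server_check_hit(history: PositionHistory, now_s: float, shooter_latency_s: float,
                     interp_delay_s: float, aimed_x: float, hit_radius: float = 0.5) -> bool:
    """Test the shot against the world as the shooter saw it, not as it is now."""
    view_time = now_s - shooter_latency_s - interp_delay_s
    return abs(history.position_at(view_time) - aimed_x) <= hit_radius


# Target runs right at 10 units/s; the server records it every 50 ms.
history = PositionHistory()
for tick in range(21):
    history.record(tick * 0.05, tick * 0.05 * 10.0)

now = 1.0  # on the server, the target is now at x = 10.0
# The shooter aimed at x = 8.5, where the target appeared on their screen.
print(server_check_hit(history, now, shooter_latency_s=0.1, interp_delay_s=0.05, aimed_x=8.5))  # True
print(abs(history.position_at(now) - 8.5) <= 0.5)  # False: without rewinding, it's a miss
```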

But that’s not the craziest part. There are more viewpoints at play. Our enemy probably uses client-side prediction, meaning they’re even ahead of the server. The key thing here is the distance between what our opponent sees and what we see. This difference can have serious consequences in games.

Consider an example from Battlefield 1: an enemy rushes into a small room, we aim and shoot, and the damage is applied a few frames later. From the shooter’s viewpoint, everything seems normal: we aimed directly, shot, and dealt damage. For the player taking the damage, however, it seems unfair. They believe they’re safe in a covered room, yet they’re hit by an enemy they didn’t see. This technique is a necessary evil: it creates controversial situations but greatly improves the shooting experience in first-person shooters.

Imagine a world without this technique. We’d need to aim ahead of the enemy every time, adjusting for network latency, which is frustrating and impractical. Game developers must consider the impact of such techniques, especially in esports, to minimize controversies during important events.

The techniques I’ve discussed are only part of what’s out there. I chose them for their importance and prevalence in most online games, except for lag compensation, which is more case-specific but still common. Ultimately, what matters most is the end-user experience: the feeling players get while gaming. These techniques aim to improve that feeling, which makes them valuable additions to your games.
